Morning Brew: A Triple Shot of Today's Hottest Topics ☕🌞

Good morning, readers! Today, we're serving a triple-shot espresso of tech and trends that'll perk up your day faster than your morning coffee!

First up, Apple's M2 MacBook Air is under the cybersecurity spotlight 🔦🍏. A new GPU vulnerability, charmingly named LeftoverLocals, has been detected, affecting not just Apple, but also Qualcomm and AMD devices. While patches are rolling out, Apple users might want to keep an eye on their devices.

Next, we're talking about AI with a twist. The ConstitutionMaker tool 🤖📜 is reshaping how we interact with AI chatbots, turning user feedback into a set of principles to guide these digital genies. It's like having a magic lamp, but the genie also takes constructive criticism.

And for our final scoop, we look at controlling TCP retransmissions: detecting issues early to prevent data loss.

Apple's GPU Glitch: An error in the Armor?

Apple recently confirmed a GPU vulnerability, dubbed LeftoverLocals, in its M2 MacBook Air, potentially affecting millions of devices. This flaw, also found in products by Qualcomm, AMD, and Imagination, lets attackers access data remnants from GPU processing. The issue is especially critical for AI chatbot queries and other machine learning models.

Security Breach: Qualcomm has already released a patch, while AMD's fix is due in March. Apple's latest M3 and A17 processors in the iPhone 15 Pro and new MacBook Pro laptops are safe, but the M2 MacBook Air and iPhone 12 remain vulnerable.

The Apple Conundrum: Apple's choice to prioritize its Apple Silicon inventory for the iPhone 15 Pro series meant no simultaneous launch of a consumer-focused MacBook Air. This decision, along with the widespread use of affected devices, could impact hundreds of millions of users worldwide.

Zooming Out: The revelation of LeftoverLocals underscores the ever-present risks in the tech world, reminding users and manufacturers alike of the importance of proactive security measures.

Refining Chatbots: The ConstitutionMaker Way

In the evolving world of AI, the new ConstitutionMaker tool is a game-changer for customizing large language models (LLMs). It allows users to shape chatbot responses by converting their feedback into guiding principles. This approach is pivotal for refining the interaction with AI-driven chatbots.

User Empowerment: ConstitutionMaker offers three feedback options: kudos for the positive aspects of a response, critique for the negative aspects, and rewrite for direct modifications. Each feedback type automatically generates a principle that's incorporated into the chatbot’s prompt.

Efficient Customization: A study involving 14 industry professionals found that ConstitutionMaker facilitated more efficient and effective principle writing, reducing mental strain and improving chatbot guidance.

Innovation in Interaction: ConstitutionMaker represents a significant stride in interactive AI, providing a platform for users to intuitively critique and improve AI outputs, ensuring that chatbots align more closely with user expectations and needs.

TCP Troubles: Silent Servers and Data Dilemmas

Navigating TCP (Transmission Control Protocol) communications can be tricky, especially when dealing with unresponsive servers. This article delves into managing such scenarios effectively, particularly when the server fails to acknowledge sent data.

The Silent Server Scenario: Using Ruby code examples, it illustrates a situation where an application continuously sends data over a TCP socket without receiving any server response. This raises a challenge: how to detect server unresponsiveness and prevent data loss.

Deep Dive into TCP Mechanics: The piece explains TCP flow and communication between the application and socket, emphasizing the importance of managing the TCP retransmission timeout.

Preventing Data Loss: The solution proposed involves setting a retransmission timeout using the TCP_USER_TIMEOUT socket option, allowing the application to detect unresponsive servers more quickly and prevent data loss.

Let's construct a sample TCP server and client in Ruby so we can observe the communication process in action.

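server.rb:

```ruby
# server.rb
require 'socket'
require 'time'

$stdout.sync = true # flush output immediately so it shows up in the container logs

puts 'starting tcp server...'
server = TCPServer.new(1234)
puts 'started tcp server on port 1234'

loop do
  # Handle each accepted connection in its own thread
  Thread.start(server.accept) do |client|
    puts 'new client'
    # Print every line the client sends until it disconnects
    while (message = client.gets)
      puts "#{Time.now}]: #{message.chomp}"
    end
    client.close
  end
end
```

And the client.rb: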

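```ruby
# client.rb
require 'socket'
require 'time'

$stdout.sync = true # flush output immediately so it shows up in the container logs

socket = Socket.tcp('server', 1234) # 'server' is the service name from docker-compose.yml

loop do
  puts "sending message to the socket at #{Time.now}"
  socket.write "Hello from client\n"
  sleep 1
end
```

Encapsulate this setup in containers using this Dockerfile: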

```dockerfile
FROM ruby:2.7

RUN apt-get update && apt-get install -y tcpdump

# Set the working directory in the container
WORKDIR /usr/src/app

# Copy the current directory contents into the container at /usr/src/app
COPY . .
```

and docker-compose.yml:

```yaml
version: '3'
services:
  server:
    build:
      context: .
      dockerfile: Dockerfile
    command: ruby server.rb
    volumes:
      - .:/usr/src/app
    ports:
      - "1234:1234"
    healthcheck:
      test: ["CMD", "sh", "-c", "nc -z localhost 1234"]
      interval: 1s
      timeout: 1s
      retries: 2
    networks:
      - net
  client:
    build:
      context: .
      dockerfile: Dockerfile
    command: ruby client.rb
    volumes:
      - .:/usr/src/app
      - ./data:/data
    depends_on:
      - server
    networks:
      - net
networks:
  net:
```

Now, we can easily run this with docker compose up and see in the logs how the client sends the message and the server receives it:

```
$ docker compose up
[+] Running 2/0
 ⠿ Container tcp_tests-server-1 Created 0.0s
 ⠿ Container tcp_tests-client-1 Created 0.0s
Attaching to tcp_tests-client-1, tcp_tests-server-1
tcp_tests-server-1 | starting tcp server...
tcp_tests-server-1 | started tcp server on port 1234
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:08 +0000
tcp_tests-server-1 | new client
tcp_tests-server-1 | 2024-01-14 08:59:08 +0000]: Hello from client
tcp_tests-server-1 | 2024-01-14 08:59:09 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:09 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:10 +0000
tcp_tests-server-1 | 2024-01-14 08:59:10 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:11 +0000
tcp_tests-server-1 | 2024-01-14 08:59:11 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:12 +0000
tcp_tests-server-1 | 2024-01-14 08:59:12 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-14 08:59:13 +0000
```

The situation gets more interesting when we simulate a server failure for an active connection.

We do this using docker compose stop server:

```
tcp_tests-server-1 | 2024-01-14 09:04:23 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:24 +0000
tcp_tests-server-1 | 2024-01-14 09:04:24 +0000]: Hello from client
tcp_tests-server-1 exited with code 1
tcp_tests-server-1 exited with code 0
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:25 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:26 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:27 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:28 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:29 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:30 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:31 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:04:32 +0000
```

We observe that the server is now offline, yet the client behaves as if the connection is still active, continuing to send data to the socket without hesitation.

This leads me to question why this occurs. Logically, the client should detect the server's downtime within a short span, possibly seconds, as TCP fails to receive acknowledgments for its packets, prompting a connection closure.

However, the actual outcome diverged from this expectation:

```
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:11 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:12 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:13 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:14 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:15 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:16 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:17 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:18 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:19 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:20 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:21 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:22 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-14 09:20:23 +0000
tcp_tests-client-1 | client.rb:11:in `write': No route to host (Errno::EHOSTUNREACH)
tcp_tests-client-1 | from client.rb:11:in `block in <main>'
tcp_tests-client-1 | from client.rb:9:in `loop'
tcp_tests-client-1 | from client.rb:9:in `<main>'
tcp_tests-client-1 exited with code 1
```

In reality, the client may remain unaware of the disrupted connection for as long as 15 minutes.

What causes this delay in detection? Let's delve deeper to understand the reasons.

In-Depth: TCP Communication Mechanics

To fully cover this case, let's first revisit the basic principles, followed by an examination of how the client transmits data over TCP.

TCP basics

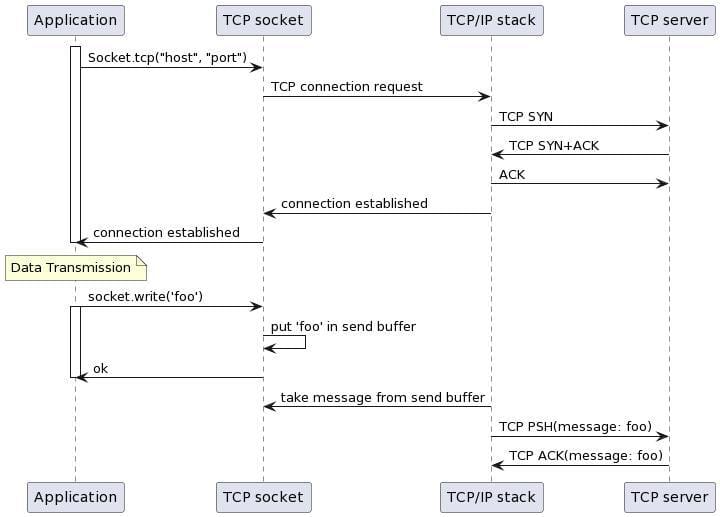

Here is the basic diagram illustrating the TCP flow:

Once a connection is established, the transmission of each message typically involves two key steps:

The client sends the message, marked with the PSH (Push) flag.

The server acknowledges receipt by sending back an ACK (Acknowledgment) response.

Communication Between the Application and Socket

Below is a simplified sequence diagram illustrating the opening of a TCP socket by an application and the subsequent data transmission through it:

The application makes two operations:

Opening a TCP socket

Sending data to the open socket

For instance, when opening a TCP socket, as done with Ruby's Socket.tcp(host, port) command, the system synchronously creates a socket using the socket(2) system call and then establishes a connection via the connect(2) system call.

As for sending data, using a command like socket.write('foo') in an application primarily places the message into the socket's send buffer. It then returns the count of bytes that were successfully queued. The actual transmission of this data over the network to the destination host is managed asynchronously by the TCP/IP stack.

This means that when an application writes to the socket, it isn't directly involved in the network operations and might not know in real-time if the connection is still active. The only confirmation it receives is that the message has been successfully added to the TCP send buffer.
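The behavior described above can be observed with a small, self-contained sketch. It assumes a local listener on 127.0.0.1 that never accepts or reads anything (port 0 simply asks the OS for a free port; neither is part of the original setup). The write still returns the number of bytes queued, which shows that its return value confirms buffering only, not delivery to the peer application.

```ruby
require 'socket'

# A local listener that never calls accept and never reads.
# Port 0 lets the OS pick any free port (an assumption for this demo).
server = TCPServer.new('127.0.0.1', 0)
port = server.addr[1]

# The kernel completes the handshake from the listen backlog,
# so the connect succeeds even though nobody accepts.
client = TCPSocket.new('127.0.0.1', port)

# write returns once the bytes are queued in the socket's send buffer;
# it says nothing about whether the remote application received them.
queued = client.write("Hello from client\n")
puts "queued #{queued} bytes"

client.close
server.close
```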

What Happens When the TCP Server Goes Down?

As the server does not respond with an ACK flag, our TCP stack will initiate a retransmission of the last unacknowledged packet:

The interesting thing here is that, by default, the Linux TCP stack makes up to 15 retransmissions with exponential backoff, which results in roughly 15 minutes of retries.

You can check how many retries are set on your host:

```
$ sysctl net.ipv4.tcp_retries2
net.ipv4.tcp_retries2 = 15
```

After diving into the docs, it becomes clear; the ip-sysctl.txt documentation says:

The default value of 15 yields a hypothetical timeout of 924.6 seconds and is a lower bound for the effective timeout. TCP will effectively time out at the first RTO which exceeds the hypothetical timeout.

During this period, the local TCP socket is alive and accepts data. When all retries are made, the socket is closed, and the application receives an error on an attempt to send anything to the socket.
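The 924.6-second figure quoted above can be reproduced with a little arithmetic. The sketch below assumes the Linux defaults of a 200 ms minimum retransmission timeout (RTO) that doubles on every retry and is capped at 120 seconds; the constant and method names are illustrative only. Real connections derive their RTO from the measured round-trip time, so actual timeouts will differ.

```ruby
# Assumed Linux defaults: TCP_RTO_MIN = 200 ms, TCP_RTO_MAX = 120 s.
RTO_MIN = 0.2
RTO_MAX = 120.0

# Sum of the waits before giving up: the initial timeout plus one
# exponentially backed-off timeout per retry, each capped at RTO_MAX.
def hypothetical_timeout(retries)
  (0..retries).sum { |n| [RTO_MIN * 2**n, RTO_MAX].min }
end

puts hypothetical_timeout(15).round(1)
```

The first ten intervals (0.2 s up to 102.4 s) add up to 204.6 seconds, and the remaining six are capped at 120 seconds each, giving 204.6 + 720 = 924.6 seconds.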

Why Is It Usually Not an Issue?

The scenario where the TCP server unexpectedly goes down without sending FIN or RST TCP flags, or when there are connectivity issues, is quite common. So why do such situations often go unnoticed?

Because, in most cases, the server responds with some response on the application level. For example, the HTTP protocol requires the server to respond to every request. Basically, when you have code like connection.get, it makes two main operations:

Writes your payload to the TCP socket's send buffer. From this point onward, the operating system's TCP stack takes responsibility for reliably delivering these packets to the remote server with TCP guarantees.

Waits for a response from the remote server in the TCP receive buffer. Typically, applications use nonblocking reads from the receive buffer of the same TCP socket.

This approach simplifies matters considerably because, in such cases, we can easily set a timeout on the application level and close the socket if there is no response from the server within a defined time frame.

However, when we don't expect any response from the server (except TCP acknowledgments), it becomes less straightforward to determine the connection's status from the application level.
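The application-level timeout mentioned above, for protocols where a reply is expected, might look like the following minimal sketch. The helper name request_with_timeout, the 0.5-second timeout, and the silent local listener are all assumptions made for illustration; they are not part of the original setup.

```ruby
require 'socket'

# Hypothetical helper: send a payload, then wait at most `timeout` seconds
# for any reply. Returns the reply, or nil if nothing arrived in time.
def request_with_timeout(socket, payload, timeout)
  socket.write(payload)
  readable, = IO.select([socket], nil, nil, timeout)
  return nil unless readable

  socket.readpartial(4096)
end

# Demo against a local listener that never accepts or replies.
server = TCPServer.new('127.0.0.1', 0)
client = TCPSocket.new('127.0.0.1', server.addr[1])

reply = request_with_timeout(client, "ping\n", 0.5)
if reply.nil?
  puts 'no response within 0.5s, closing socket'
  client.close
end
server.close
```

This only works because the protocol promises a reply. With a write-only stream, as in our client, there is nothing to wait for, which is the case the rest of the article deals with.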

The Impact of Long TCP Retransmissions

So far, we've established the following:

The application opens a TCP socket and regularly writes data to it.

At some point, the TCP server goes down without even sending an RST packet, and the TCP stack of the sender begins retransmitting the last unacknowledged packet.

All other packets written to that socket are queued in the socket’s send buffer.

By default, the TCP stack attempts to retransmit the unacknowledged packet 15 times, employing exponential backoff, resulting in a duration of approximately 924.6 seconds (about 15 minutes).

During this 15-minute period, the local TCP socket remains open, and the application continues to write data to it until the send buffer is full (which typically has a limited capacity, often just a few megabytes). When the socket is eventually marked as closed after all retransmissions, all the data in the send buffer is lost.

This is because the application is no longer responsible for it after writing to the send buffer, and the operating system simply discards this data.

The application can only detect that the connection is broken when the send buffer of the TCP socket becomes full. In such cases, attempting to write to the socket will block the main thread of the application, allowing it to handle the situation.

However, the effectiveness of this detection method depends on the size of the data being sent.

For instance, if the application sends only a few bytes, such as metrics, it may not fully fill the send buffer within this 15-minute timeframe.
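To get a feel for the numbers, the following back-of-the-envelope sketch reads the default send buffer size of a fresh TCP socket and estimates how long our client's 18-byte message per second would take to fill it. This is a rough estimate under stated assumptions: the kernel also accounts for per-packet overhead and may resize the buffer automatically, so real behavior will differ.

```ruby
require 'socket'

# Read the default send buffer size of an unconnected TCP socket.
socket = Socket.new(:INET, :STREAM)
sndbuf = socket.getsockopt(Socket::SOL_SOCKET, Socket::SO_SNDBUF).int
socket.close

# Our client writes this message once per second.
message_size = "Hello from client\n".bytesize

# Naive estimate that ignores kernel bookkeeping overhead and autotuning.
seconds_to_fill = sndbuf / message_size

puts "send buffer: #{sndbuf} bytes"
puts "roughly #{seconds_to_fill} seconds of writes to fill it at #{message_size} bytes/s"
```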

So, how can we close a connection after an explicitly set timeout when the TCP server is down, and so avoid 15 minutes of retransmissions and the data lost during that period?

TCP Retransmissions Timeout Using the Socket Option

In private networks, extensive retransmissions aren't typically necessary, and it's possible to configure the TCP stack to attempt only a limited number of retries. However, this configuration applies globally to the entire node. Since multiple applications often run on the same node, altering this default value can lead to unexpected side effects.

A more precise approach is to set a retransmission timeout exclusively for our socket using the TCP_USER_TIMEOUT socket option. By employing this option, the TCP stack will automatically close the socket if retransmissions do not succeed within the specified timeout, regardless of the maximum number of TCP retransmissions set globally.

On the application level, this results in an error being received upon attempting to write data to a closed socket, allowing for proper data loss prevention handling.
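One way to handle such a write error is sketched below. The helper name write_or_keep, the pending array, and the list of rescued errors are illustrative assumptions, not part of the original client. The demo triggers the error path with a locally closed socket rather than a real timeout, so it runs without a server.

```ruby
require 'socket'

# Errors that suggest the connection is gone (illustrative, not exhaustive).
CONNECTION_ERRORS = [
  Errno::ETIMEDOUT, Errno::EPIPE, Errno::ECONNRESET, Errno::EHOSTUNREACH, IOError
].freeze

# Hypothetical helper: try to write; on failure keep the message in `pending`
# so the caller can resend it after reconnecting. Returns true on success.
def write_or_keep(socket, message, pending)
  socket.write(message)
  true
rescue *CONNECTION_ERRORS => e
  warn "write failed: #{e.class}"
  pending << message
  false
end

# Demo: a socket that is already closed locally raises IOError on write.
pending = []
socket = Socket.new(:INET, :STREAM)
socket.close
ok = write_or_keep(socket, "Hello from client\n", pending)
puts "delivered to buffer: #{ok}, pending: #{pending.size}"
```

Note that this only protects the message whose write failed. Messages that were already queued in the send buffer but never acknowledged are still lost, so an application that cannot tolerate that would need its own acknowledgment or replay scheme.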

Let’s set this socket option in client.rb:

```ruby
# client.rb
require 'socket'
require 'time'

$stdout.sync = true

socket = Socket.tcp('server', 1234)

# set a 5-second retransmission timeout (the value is in milliseconds)
socket.setsockopt(Socket::IPPROTO_TCP, Socket::TCP_USER_TIMEOUT, 5000)

loop do
  puts "sending message to the socket at #{Time.now}"
  socket.write "Hello from client\n"
  sleep 1
end
```

Now, start everything again with docker compose up, and at some point, let’s stop the server again with docker compose stop server:

```
$ docker compose up
[+] Running 2/0
 ⠿ Container tcp_tests-server-1 Created 0.0s
 ⠿ Container tcp_tests-client-1 Created 0.0s
Attaching to tcp_tests-client-1, tcp_tests-server-1
tcp_tests-server-1 | starting tcp server...
tcp_tests-server-1 | started tcp server on port 1234
tcp_tests-server-1 | new client
tcp_tests-server-1 | 2024-01-20 12:37:38 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:38 +0000
tcp_tests-server-1 | 2024-01-20 12:37:39 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:39 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:40 +0000
tcp_tests-server-1 | 2024-01-20 12:37:40 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:41 +0000
tcp_tests-server-1 | 2024-01-20 12:37:41 +0000]: Hello from client
tcp_tests-server-1 | 2024-01-20 12:37:42 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:42 +0000
tcp_tests-server-1 | 2024-01-20 12:37:43 +0000]: Hello from client
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:43 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:44 +0000
tcp_tests-server-1 | 2024-01-20 12:37:44 +0000]: Hello from client
tcp_tests-server-1 exited with code 1
tcp_tests-server-1 exited with code 0
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:45 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:46 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:47 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:48 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:49 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:50 +0000
tcp_tests-client-1 | sending message to the socket at 2024-01-20 12:37:51 +0000
tcp_tests-client-1 | client.rb:11:in `write': Connection timed out (Errno::ETIMEDOUT)
tcp_tests-client-1 | from client.rb:11:in `block in <main>'
tcp_tests-client-1 | from client.rb:9:in `loop'
tcp_tests-client-1 | from client.rb:9:in `<main>'
tcp_tests-client-1 exited with code 1
```

I stopped the server at ~12:37:45, and the client got Errno::ETIMEDOUT a little over 5 seconds later. Great.

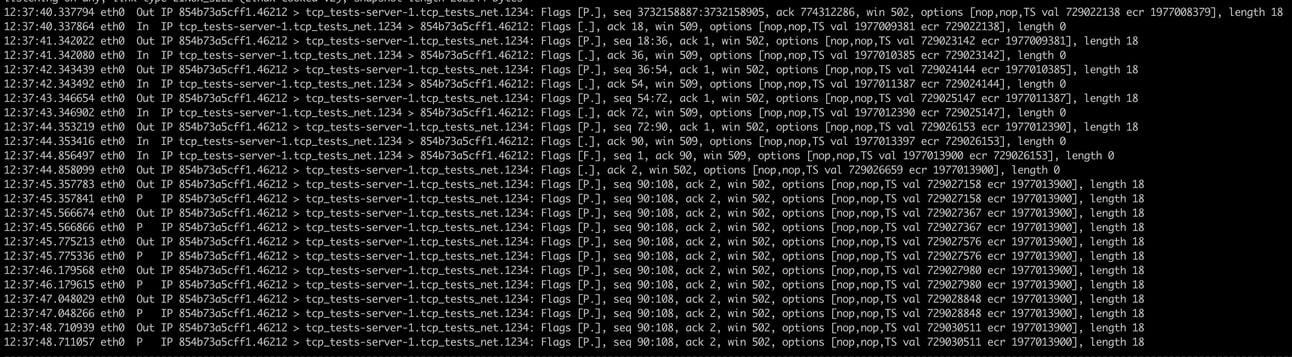

Let’s capture a tcpdump with docker exec -it tcp_tests-client-1 tcpdump -i any tcp port 1234:

It's worth noting that the timeout actually occurs in a bit more than 5 seconds. This is because the check for exceeding TCP_USER_TIMEOUT happens at the next retry. When the TCP/IP stack detects that the timeout has been exceeded, it marks the socket as closed, and our application receives the Errno::ETIMEDOUT error.

Also, if you are using TCP keepalives, I recommend checking out this article from Cloudflare. It covers the nuances of using TCP_USER_TIMEOUT in conjunction with TCP keepalives.